pylops_mpi.DistributedArray

- class pylops_mpi.DistributedArray(global_shape, base_comm=<mpi4py.MPI.Intracomm object>, base_comm_nccl=None, partition=Partition.SCATTER, axis=0, local_shapes=None, mask=None, engine='numpy', dtype=<class 'numpy.float64'>)

Distributed NumPy Arrays

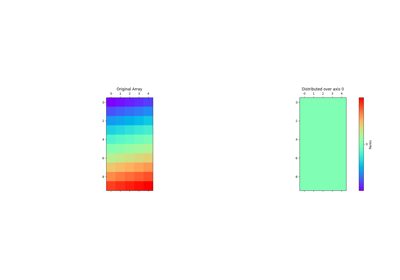

Multidimensional NumPy-like distributed arrays. It brings NumPy arrays to high-performance computing.

Warning

When setting the partition of the DistributedArray to pylops_mpi.Partition.BROADCAST, be aware that any attempt to make the array differ from rank to rank will be overwritten by rank 0. Internally, the content of rank 0 is broadcast every time a modification of the array is attempted. This behaviour incurs a communication cost that is unnecessary if the user guarantees that the array is never modified differently on different ranks. To avoid broadcasting, use pylops_mpi.Partition.UNSAFE_BROADCAST instead.

- Parameters:

- global_shape

tuple or int Shape of the global array.

- base_comm

mpi4py.MPI.Comm, optional MPI Communicator over which array is distributed. Defaults to mpi4py.MPI.COMM_WORLD.

- base_comm_nccl

cupy.cuda.nccl.NcclCommunicator, optional NCCL Communicator over which array is distributed. Whenever an NCCL Communicator is provided, base_comm is set to MPI.COMM_WORLD.

- partition

Partition, optional Broadcast, UnsafeBroadcast, or Scatter the array. Defaults to Partition.SCATTER.

- axis

int, optional Axis along which distribution occurs. Defaults to 0.

- local_shapes

list, optional List of tuples or integers representing local shapes at each rank.

- mask

list, optional Mask defining subsets of ranks to consider when performing ‘global’ operations on the distributed array such as dot product or norm.

- engine

str, optional Engine used to store array (numpy or cupy). Defaults to numpy.

- dtype

str, optional Type of elements in input array. Defaults to numpy.float64.
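
The relationship between global_shape, axis, and local_shapes can be illustrated with a plain-Python sketch that does not call pylops_mpi. It assumes that a Partition.SCATTER array is split as evenly as possible along axis, with the leading ranks receiving the remainder. The helper name scatter_local_shapes is hypothetical, and the library's actual default split may differ.

```python
def scatter_local_shapes(global_shape, n_ranks, axis=0):
    """Hypothetical helper: split global_shape along axis into n_ranks local shapes.

    Assumption: the split is as even as possible and the first
    (global_shape[axis] % n_ranks) ranks receive one extra element.
    """
    if isinstance(global_shape, int):
        global_shape = (global_shape,)
    base, extra = divmod(global_shape[axis], n_ranks)
    shapes = []
    for rank in range(n_ranks):
        local = list(global_shape)
        # Leading ranks absorb the remainder, one element each
        local[axis] = base + (1 if rank < extra else 0)
        shapes.append(tuple(local))
    return shapes


# A (10, 4) global array scattered over 3 ranks along axis 0
print(scatter_local_shapes((10, 4), n_ranks=3, axis=0))
# [(4, 4), (3, 4), (3, 4)]
```

A list of this form, with one shape per rank, is the kind of value local_shapes is documented to accept when the default split is not wanted.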


Methods

| Method | Description |
| --- | --- |
| `__init__(global_shape[, base_comm, ...])` | |
| `add(dist_array)` | Distributed Addition of arrays |
| `add_ghost_cells([cells_front, cells_back])` | Add ghost cells to the DistributedArray along the axis of partition at each rank. |
| `asarray([masked])` | Global view of the array |
| `conj()` | Distributed conj() method |
| `copy()` | Creates a copy of the DistributedArray |
| `dot(dist_array)` | Distributed Dot Product |
| `iadd(dist_array)` | Distributed In-place Addition of arrays |
| `multiply(dist_array)` | Distributed Element-wise multiplication |
| `norm([ord, axis])` | Distributed numpy.linalg.norm method |
| `ravel([order])` | Return a flattened DistributedArray |
| `to_dist(x[, base_comm, base_comm_nccl, ...])` | Convert A Global Array to a Distributed Array |
| `zeros_like()` | Creates a copy of the DistributedArray filled with zeros |
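
To give a feel for what "distributed" means for reductions such as dot and norm, the sketch below simulates the idea in a single process with plain Python lists: each simulated rank reduces its own chunk, and the partial results are then summed, which stands in for an all-reduce across ranks. It does not call pylops_mpi or MPI; the names split_chunks, simulated_dot, and simulated_norm are hypothetical, and the library's actual implementation may differ.

```python
import math


def split_chunks(values, n_ranks):
    """Hypothetical helper: split a list into n_ranks contiguous, near-equal chunks."""
    base, extra = divmod(len(values), n_ranks)
    chunks, start = [], 0
    for rank in range(n_ranks):
        stop = start + base + (1 if rank < extra else 0)
        chunks.append(values[start:stop])
        start = stop
    return chunks


def simulated_dot(x_chunks, y_chunks):
    """Each simulated rank computes a local dot product; partials are then summed."""
    partials = [
        sum(a * b for a, b in zip(xc, yc))
        for xc, yc in zip(x_chunks, y_chunks)
    ]
    # In a real MPI program this sum would be a reduction across ranks
    return sum(partials)


def simulated_norm(x_chunks):
    """Euclidean norm assembled from per-rank partial sums of squares."""
    return math.sqrt(simulated_dot(x_chunks, x_chunks))


x = [3.0, 0.0, 4.0, 0.0, 0.0]
y = [2.0, 2.0, 2.0, 2.0, 2.0]
x_chunks = split_chunks(x, n_ranks=2)  # [[3.0, 0.0, 4.0], [0.0, 0.0]]
y_chunks = split_chunks(y, n_ranks=2)

print(simulated_dot(x_chunks, y_chunks))  # 14.0
print(simulated_norm(x_chunks))           # 5.0
```

The point of the sketch is that no rank ever needs the full array: only one scalar per rank is exchanged, in contrast to asarray, which is documented as producing a global view of the array.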

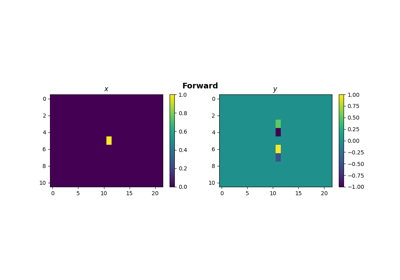

Examples using pylops_mpi.DistributedArray

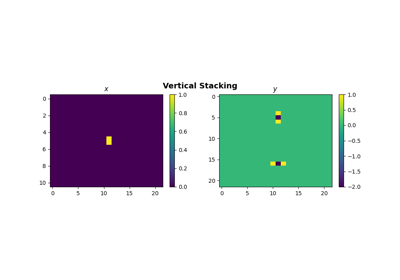

Distributed Matrix Multiplication - Block-row-column decomposition